Solar Radiation Monitoring Laboratory (SRML)

The Solar Radiation Monitoring Laboratory (SRML) at the University of Oregon has been providing solar irradiance data for the Northwestern United States since 1975. The SRML monitoring network consists of high-quality stations that measure all three irradiance components at 1-minute resolution, as well as stations with lower-quality instruments that log measurements only hourly. A full list of the 42 stations (including discontinued stations) can be found at the SRML website.

Only the high-quality SRML stations are included in the SolarStations.org station catalog; however, all active stations are shown below.

Help support the SRML

If you find the data useful, please consider donating to support the SRML.

| Station name | Abbreviation | State | Country | Latitude | Longitude | Elevation | Time period | Network | Owner | Comment | Data availability | Tier | Instrument | Components |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

Data retrieval

Data from the SRML stations are stored in monthly files for each station and can be freely downloaded from the SRML website. The data can also be downloaded programmatically using the pvlib-python library, specifically the pvlib.iotools.get_srml function. If you use pvlib iotools for published work, please cite Jensen et al. (2023), which provides additional background information. A list of the station acronyms can be found here.
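Because the archive holds one file per station per month, a request that spans several months touches several files. The following is a minimal sketch of how the months covered by a date range could be enumerated. The helper `months_in_range` is hypothetical and written only for illustration; it is not part of pvlib and does not describe how pvlib implements this internally.

```python
from datetime import date


def months_in_range(start, end):
    """Return (year, month) tuples for every calendar month from start to end.

    Hypothetical helper for illustration only; not part of pvlib.
    """
    if end < start:
        raise ValueError("end must not be earlier than start")
    months = []
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        months.append((year, month))
        # Advance one month, rolling over to January of the next year
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return months


print(months_in_range(date(2020, 11, 15), date(2021, 2, 3)))
# [(2020, 11), (2020, 12), (2021, 1), (2021, 2)]
```

A request limited to a single month, such as June 2020, would map to exactly one monthly file.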

An example of how to use pvlib to download data from the Hermiston station for June 2020 is shown here:

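```python
import pvlib
from itables import show  # interactive table display used on this page

# Download one month of data for the Hermiston station (abbreviation 'HE')
data, meta = pvlib.iotools.get_srml(
    station='HE',
    start='2020-06-01',
    end='2020-06-30',
    url='http://solardata.uoregon.edu/download/Archive/')

# Preview the first rows as a scrollable table
show(data.head(), scrollX=True, scrollCollapse=True, paging=False, maxColumns=100, dom="tpr")
```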


| ghi_0 | ghi_0_flag | dni_0 | dni_0_flag | dhi_3 | dhi_3_flag | ghi_2 | ghi_2_flag | dni_2 | dni_2_flag | dhi_2 | dhi_2_flag | temp_air_0 | temp_air_0_flag | ghi_1 | ghi_1_flag | dhi_1 | dhi_1_flag | temp_air_1 | temp_air_1_flag | relative_humidity_1 | relative_humidity_1_flag | 9151 | 9151_flag | wind_direction_1 | wind_direction_1_flag | wind_speed_1 | wind_speed_1_flag | dhi_0 | dhi_0_flag |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
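In the column header above, each measurement column is paired with a companion column carrying the same name plus a `_flag` suffix. A minimal sketch of separating the two groups is shown here. It assumes that this naming pattern holds for all columns, and `split_flag_columns` is a hypothetical helper, not a pvlib function.

```python
def split_flag_columns(columns):
    """Split column names into measurements and their companion flag columns.

    Assumes a flag column is named '<measurement>_flag'.
    Hypothetical helper for illustration only; not part of pvlib.
    """
    measurements = [c for c in columns if not c.endswith('_flag')]
    # Map each measurement to its flag column, if one is present
    flags = {c: f'{c}_flag' for c in measurements if f'{c}_flag' in columns}
    return measurements, flags


# A few of the column names from the Hermiston example
columns = ['ghi_0', 'ghi_0_flag', 'dni_0', 'dni_0_flag', 'dhi_3', 'dhi_3_flag']
measurements, flags = split_flag_columns(columns)
print(measurements)    # ['ghi_0', 'dni_0', 'dhi_3']
print(flags['dni_0'])  # dni_0_flag
```

With a downloaded DataFrame, passing `list(data.columns)` to the helper and then indexing with `data[measurements]` would select only the measured values.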

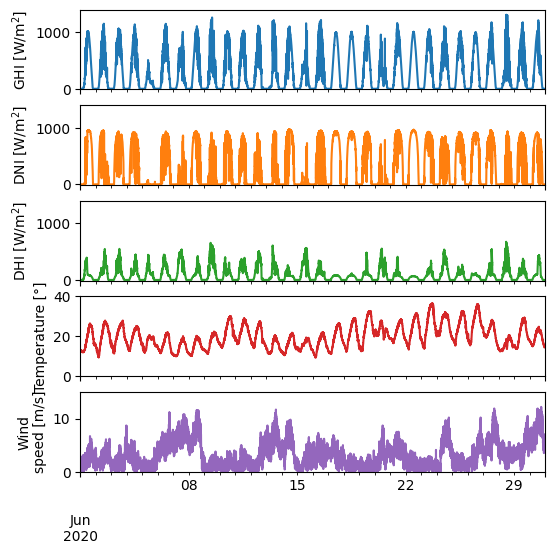

The data retrieved from the Hermiston station include measurements of the three irradiance components and additional weather parameters including temperature and humidity. A few of the parameters in the downloaded datasets are visualized below.


```python
# Plot a subset of the irradiance and weather variables in stacked subplots
axes = data[['ghi_0', 'dni_0', 'dhi_3', 'temp_air_1', 'wind_speed_1']].plot(
    subplots=True, legend=False, rot=0, figsize=(6, 6), sharex=True)

# Set y-labels and y-limits
y_settings = [
    ('GHI [W/m$^2$]', (-10, 1400)),
    ('DNI [W/m$^2$]', (-10, 1400)),
    ('DHI [W/m$^2$]', (-10, 1400)),
    ('Temperature [°C]', (0, 40)),
    ('Wind\nspeed [m/s]', (0, 15)),
]
for ax, (label, ylim) in zip(axes, y_settings):
    ax.set_ylabel(label)
    ax.set_ylim(ylim)
```
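Since the Hermiston data include all three irradiance components, the measurements can be cross-checked against each other with the standard closure relation GHI = DNI · cos(θz) + DHI, where θz is the solar zenith angle. The sketch below illustrates the arithmetic with made-up values rather than station data. The function name is hypothetical, and in practice the zenith angle would come from a solar position calculation, which is not shown here.

```python
import math


def ghi_from_components(dni, dhi, zenith_deg):
    """Estimate GHI [W/m^2] from DNI, DHI and the solar zenith angle [degrees]."""
    return dni * math.cos(math.radians(zenith_deg)) + dhi


# Illustrative values only, not measurements from the Hermiston station
dni, dhi, zenith_deg = 800.0, 100.0, 60.0
measured_ghi = 505.0

calculated_ghi = ghi_from_components(dni, dhi, zenith_deg)
residual = measured_ghi - calculated_ghi
print(f"calculated GHI: {calculated_ghi:.1f} W/m^2, residual: {residual:.1f} W/m^2")
```

A large residual between the measured and calculated GHI would suggest that at least one of the three measurements deserves a closer look.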

References

Adam R. Jensen, Kevin S. Anderson, William F. Holmgren, Mark A. Mikofski, Clifford W. Hansen, Leland J. Boeman, and Roel Loonen. Pvlib iotools—open-source python functions for seamless access to solar irradiance data. Solar Energy, 266:112092, 2023. doi: 10.1016/j.solener.2023.112092.